Marc Deisenroth

Google DeepMind Chair of Machine Learning and Artificial Intelligence

University College London

Biography

Professor Marc Deisenroth is the Google DeepMind Chair of Machine Learning and Artificial Intelligence at University College London. He is also a Research Director at Google DeepMind. Marc co-leads the Sustainability and Machine Learning Group at UCL. His research interests center on data-efficient machine learning, probabilistic modeling and autonomous decision making, with applications in climate and weather science, nuclear fusion, and robotics.

Marc was Program Chair of EWRL 2012, Workshops Chair of RSS 2013, EXPO Chair at ICML 2020, Tutorials Chair at NeurIPS 2021, Program Chair at ICLR 2022, and Program Chair at NeurIPS 2026. He serves on the Scientific Advisory Boards of the National Oceanography Centre as well as the United Nations University Global AI Network. He received Paper Awards at ICRA 2014, ICCAS 2016, ICML 2020, AISTATS 2021, and FAccT 2023. In 2019, Marc co-organized the Machine Learning Summer School in London.

In 2018, Marc received The President’s Award for Outstanding Early Career Researcher at Imperial College. He is a recipient of a Google Faculty Research Award and a Microsoft PhD Grant.

In 2018, Marc spent four months at the African Institute for Mathematical Sciences (Rwanda), where he taught a course on Foundations of Machine Learning as part of the African Masters in Machine Intelligence. He is co-author of the book Mathematics for Machine Learning, published by Cambridge University Press.

Research Expertise

Machine Learning: Data-efficient machine learning, Gaussian processes, reinforcement learning, Bayesian optimization, approximate inference, deep probabilistic models, geospatial models

Environment and Sustainability: Data assimilation, data-driven forecasting models, renewables

Robotics and Control: Robot learning, legged locomotion, planning under uncertainty, imitation learning, adaptive control, robust control, learning control, optimal control

Signal Processing: Nonlinear state estimation, Kalman filtering, time-series modeling, dynamical systems, system identification, stochastic information processing

Key Publications

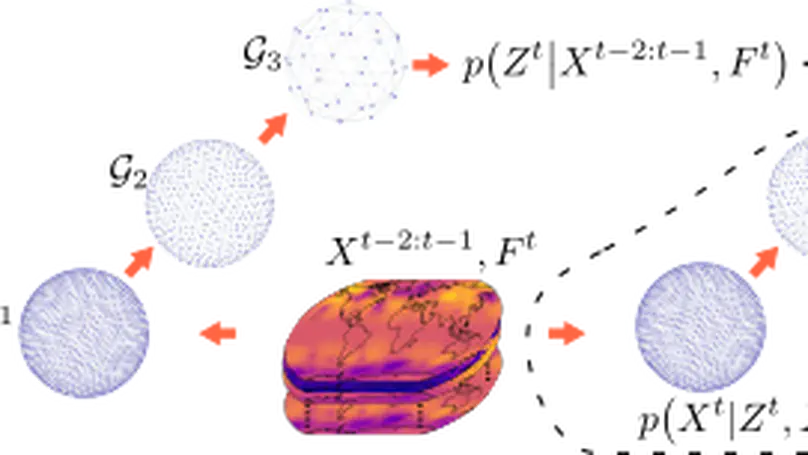

In recent years, machine learning has established itself as a powerful tool for high-resolution weather forecasting. While most current machine learning models focus on deterministic forecasts, accurately capturing the uncertainty in the chaotic weather system calls for probabilistic modeling. We propose a probabilistic weather forecasting model called Graph-EFM, combining a flexible latent-variable formulation with the successful graph-based forecasting framework. The use of a hierarchical graph construction allows for efficient sampling of spatially coherent forecasts. Requiring only a single forward pass per time step, Graph-EFM allows for fast generation of arbitrarily large ensembles. We experiment with the model on both global and limited area forecasting. Ensemble forecasts from Graph-EFM achieve equivalent or lower errors than comparable deterministic models, with the added benefit of accurately capturing forecast uncertainty.